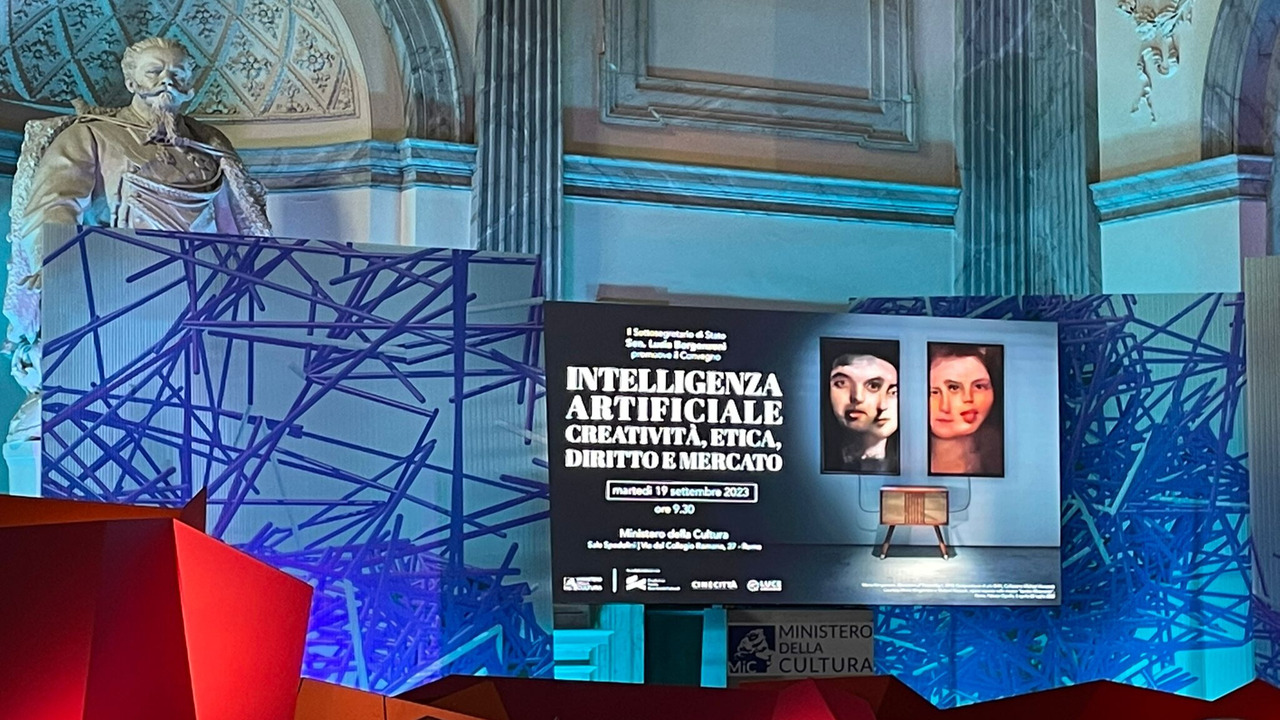

The doors of the Ministry of Culture opened, both physically and metaphorically, to the future on the occasion of the conference “Intelligenza Artificiale: creatività, etica, diritto e mercato” (“Artificial Intelligence: Creativity, Ethics, Law and Market”). The conference was promoted by Undersecretary of State Lucia Borgonzoni and held yesterday in the Spadolini Hall of the Ministry of Culture. As Borgonzoni herself stated, the event’s guiding principle of thought and action was “the centrality of humans over machines, to protect human creativity and intellectual property as well as the works themselves.” Prominent speakers took part in a day full of substance and food for thought. Topics ranged from copyright to AI’s role in the job market, and from ethical implications to risks for creativity.

Undersecretary Borgonzoni opens the proceedings by introducing the first panel: “AI is often discussed from a technical perspective, but we should start questioning related topics, such as patents and the need to defend copyright.” In this sense, the audiovisual world is called upon to ask precise, rational questions and to explore ethical dilemmas. It should do so with a non-dogmatic approach that allows us to look at things objectively. Where does ethics end and the law begin? Experts analyze current European and international rules on copyright. Valeria Falce, Professor of Economic Law at UER, states: “Since 2019, there has been a European directive pushing to adapt copyright to new technologies. It’s a fundamental reward for human creative effort and needs to be protected and clarified even more now.” Massimiliano Fuksas offers a provocative counterpoint, arguing that “copyright should be abolished. The concept of copying comes from the Catholic religion; our culture is obsessed with who copies us, but in reality, everyone copies everyone.”

However, concern about AI’s use of copyrighted works is not purely economic. Nicola Borrelli, Director of the General Directorate of Cinema and Audiovisual, and Paolo Del Brocco, CEO of Rai Cinema, address the issue of “training material.” They call for fair compensation for the use of protected works, while emphasizing that copyright also entails moral safeguards that must be respected. Consideration must also be given to privacy and to the security of the data used by AI.

From intellectual property, the discussion moves on to the impact on communication and information. The second panel examines how AI represents a continuity in both the production and the dissemination of information. After all, there is no algorithm for truth. AI could become a portal to parallel worlds that are increasingly personalized and unable to communicate with each other. Each user settles comfortably into a tailored news bubble where misinformation reigns supreme. The problem is made worse by the issue of “transparency and explainability”: in many cases, AI models are so complex that it is difficult to explain how they arrive at certain conclusions. This lack of transparency can be problematic, especially if the future comes to rely on software that cannot vouch for the veracity of its sources.

The third panel deals with the effects of AI on employment and the labor market. AI’s social and economic impact risks creating or deepening divisions within our social fabric, with ramifications that are not yet clear. One significant concern is technological dependency: growing reliance on AI-based systems can lead to a loss of human skills and greater vulnerability when the technology fails. The appeal to institutions focuses on education. Less skilled workers are often the most at risk of automation, so policies to protect them and develop their skills need to be implemented. Not Ludditism, but responsible progress that doesn’t leave behind the most vulnerable segments of the population and the workforce.

The fourth panel, focused on AI applications in art and culture, offered demonstrations of good uses of these technologies. Speakers described the current and future potential of software across a range of cultural fields: architecture and painting, fashion and audiovisual, music, video games, and publishing.

AI can help artists find inspiration, generate new ideas, or develop innovative concepts. Techniques such as deep learning and natural language generation can pave the way for new forms of artistic expression. These technologies can also be used to create immersive and personalized cultural experiences. Such experiences can cater not only to the general public but also to groups that have so far been poorly represented in the media. All this comes with reduced production costs, which until now have been a significant obstacle to making so-called “niche” or experimental works.

The day concluded with the panel “Ethics and Philosophy of Technology.” Many burning topics could be mentioned, from technological crime and ethical hacking to mass surveillance and the potential for autonomous weapons. To stay within the audiovisual field, however, consider the argument of Luciano Floridi, Founding Director of the Digital Ethics Center and Professor in the Practice at Yale University. He argues that the crux of the matter lies in the commercialization model, in which the advent of AI has shifted the balance of power between consumer and producer. “It’s no longer a broadcaster-viewer process, but I, as a viewer, ask tomorrow’s Netflix to make a movie just for me.” Who determines what content is appropriate to produce and distribute? In short, the issue is one of control and responsibility: we cannot delegate our moral responsibility to a mechanism based on autonomous decisions made by software. The professor cites the example of a voice actor whose voice can be endlessly replicated by AI. This brings to mind Keira Knightley’s concerns about digital scans of actors and AI’s power to treat them as immortal puppets in future audiovisual productions.

Gianluca Mauro, CEO of AI Academy, criticizes the approach Silicon Valley has followed so far, epitomized by Mark Zuckerberg’s motto “move fast and break things.” This lack of responsibility could become catastrophic when applied to a tool as powerful as artificial intelligence. The danger is greatest if flaws hidden deep in the software’s code produce discriminatory effects. AI algorithms can be shaped by the data they are trained on, leading to results that discriminate by ethnicity, gender, or social class. Mauro cites the example of social media platforms, which are much more likely to categorize pictures of women as “sexually suggestive content.” He concludes, “We must remember that this is a young technology, not yet well understood, and we must be very careful when we deploy it. […] AI was born the day before yesterday; it’s normal to have problems, but these problems need to be resolved. We can’t expect them to resolve themselves. My final message is that we need to move more responsibly and not leave anyone behind.”

The conference provided a thorough overview of the issues related to AI in the cultural and artistic realm, with a particular focus on the central role of copyright and regulation. Experts emphasized the need to balance technological innovation with the protection of human creativity. The goal is to ensure that AI is treated as a force to be regulated rather than a threat to be defended against.

In his address, Deputy Prime Minister Matteo Salvini sums up the sentiment that led the institutions to organize this event: “I urge you to accept the challenge but, at the same time, to take every possible and imaginable precaution. Because no machine will ever replace the beauty of imperfection, of nuance, of a change of tone or color, and the emotion that the camera operator puts into his work.”
